4 Things You Should Know When Choosing Proxies for Market Research

We live in an information age where technology shapes how people interact with the world, and the same is true for businesses. A business that enters a market without conducting market research and analysis may struggle to capture and keep its market share. In this article, we will look at how to use web scraping safely with backconnect rotating proxies.

Web Scraping Use Cases

Market research is the process of collecting data for the purpose of understanding the market. The analysis of the collected data allows you to make key business decisions based on the needs of the client, the behavior of competitors, and current trends.

This data is collected from the internet using an automated method known as web scraping. This process involves extracting large amounts of data from websites using web scraping software and saving it to a file on a computer.
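To make the "extract and save to a file" step concrete, here is a minimal sketch in Python using only the standard library. The HTML fragment, the `name` and `price` class names, and the function names are all assumptions made for illustration; a real page will have its own markup, and a real scraper would download the HTML instead of using a hard-coded string.

```python
import csv
from html.parser import HTMLParser

# Hypothetical page fragment; a real scraper would download this HTML instead.
SAMPLE_HTML = """
<ul>
  <li class="product"><span class="name">Kettle</span><span class="price">24.99</span></li>
  <li class="product"><span class="name">Toaster</span><span class="price">31.50</span></li>
</ul>
"""


class ProductParser(HTMLParser):
    """Collects (name, price) pairs from spans with class "name" or "price"."""

    def __init__(self):
        super().__init__()
        self.rows = []        # finished (name, price) rows
        self._field = None    # which span we are currently inside, if any
        self._current = {}    # fields collected for the product in progress

    def handle_starttag(self, tag, attrs):
        css_class = dict(attrs).get("class")
        if tag == "span" and css_class in ("name", "price"):
            self._field = css_class

    def handle_data(self, data):
        # Only keep text that sits inside one of the spans we care about.
        if self._field:
            self._current[self._field] = data.strip()

    def handle_endtag(self, tag):
        if tag == "span":
            self._field = None
        elif tag == "li" and "name" in self._current and "price" in self._current:
            self.rows.append((self._current["name"], float(self._current["price"])))
            self._current = {}


def extract_products(html):
    """Return a list of (name, price) tuples found in the HTML."""
    parser = ProductParser()
    parser.feed(html)
    return parser.rows


def save_to_csv(rows, path):
    """Write the extracted rows to a CSV file with a header line."""
    with open(path, "w", newline="", encoding="utf-8") as f:
        writer = csv.writer(f)
        writer.writerow(["name", "price"])
        writer.writerows(rows)
```

Calling `save_to_csv(extract_products(SAMPLE_HTML), "prices.csv")` would write the two sample products to a file named `prices.csv` (a placeholder name).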

Price Monitoring

Setting a price that attracts customers while keeping your business profitable can be tricky. You need an accurate picture of the prices your competitors are offering, and at scale that is practical only by scraping e-commerce and competitor sites.

Social Media Monitoring

Social media is a rich source of data on how the market reacts to your brand. By scraping social networking sites, you can collect posts that mention your brand directly. Analyzing this information gives you an idea of the market opportunities.

SEO Monitoring

Almost every business has a website, and to make your business visible on search engines, you need to optimize your keywords properly. Through web scraping, you can find what consumers are looking for on the internet and find the best-ranking keywords.

Lead Generation

Web scraping allows you to collect the contact details of potential customers. A scraper can gather important data such as email addresses and phone numbers.

Competition Monitoring

Your competitors’ prices are not the only thing to watch. You also need to follow their marketing campaigns: find out where they advertise their products and come up with strategies to keep up.

Why Use a Proxy for Web Scraping?

Web scraping at any real scale usually calls for proxies. There are several reasons for using intermediate servers.

Bypassing Limits on the Number of Requests to a Site

When a page is requested too many times from the same IP address, the site's anti-bot protection is triggered. The site treats such activity as a possible DDoS attack and blocks that user's access to the page.

A scraper makes a huge number of requests to a site, so its work can be interrupted at any moment when this protection kicks in. To collect data successfully, it is recommended to spread the requests across multiple IP addresses. How many you need depends on how many requests you have to make.
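As a rough illustration of how the request volume translates into a number of IP addresses, here is a small sketch. The function name and every figure in the example are assumptions; real sites do not publish their limits, so the per-IP rate is something you would have to estimate conservatively.

```python
import math


def proxies_needed(total_requests, window_hours, max_requests_per_ip_per_hour):
    """Estimate how many IP addresses keep each one under an assumed hourly limit."""
    if total_requests < 0 or window_hours <= 0 or max_requests_per_ip_per_hour <= 0:
        raise ValueError("total_requests must be >= 0; the other arguments must be > 0")
    # Requests a single IP can make during the whole scraping window.
    per_ip_capacity = window_hours * max_requests_per_ip_per_hour
    return math.ceil(total_requests / per_ip_capacity)


# Hypothetical job: 50,000 pages in 10 hours, at most 300 requests per IP per hour.
print(proxies_needed(50_000, 10, 300))  # 17
```

With these assumed numbers, each IP can make 3,000 requests over the window, so 50,000 requests need 17 addresses. In practice you would add headroom on top of this estimate.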

Bypassing Scraping Protection on Some Sites

Some sites use every possible means to protect themselves from scraping, for example by blocking attempts to copy their text or by restricting visitors from other regions. Proxies help you get around this protection. A common approach is to use a proxy located in the same country as the site. For example, to parse information from a British site, you connect through a British IP address.
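Matching the proxy's location to the target site can be sketched as a simple filter over a proxy list. The pool below is made up: the addresses come from IP ranges reserved for documentation, and the `url` and `country` field names are assumptions, since each provider describes its proxies in its own format.

```python
# Hypothetical pool; real providers supply their own endpoints and metadata.
PROXY_POOL = [
    {"url": "http://203.0.113.10:8080", "country": "GB"},
    {"url": "http://198.51.100.7:8080", "country": "US"},
    {"url": "http://203.0.113.25:8080", "country": "GB"},
]


def proxies_for_country(pool, country_code):
    """Return the URLs of all proxies located in the given country (case-insensitive)."""
    wanted = country_code.upper()
    return [proxy["url"] for proxy in pool if proxy["country"].upper() == wanted]


# Proxies suitable for scraping a British site.
british_proxies = proxies_for_country(PROXY_POOL, "GB")
```

If the filter returns an empty list, the pool has no exit point in that country and a different provider or plan would be needed.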

Keeping Up with Market Trends

Consumer tastes are unpredictable, so you need to stay alert. By tracking the right keywords, you can discover new interests in the market and adapt your product development to meet its needs.

Website owners strive to prevent the extraction of data from their sites. They block any user showing suspicious activity, and this can interfere with your web scraping project. That’s why you need proxies.

A proxy hides your IP address, gives you access to geo-blocked sites, and makes it harder for site owners to detect your scraping.

But you need the right proxy to achieve these benefits. Let’s consider the qualities of a great proxy.

Reliability

You need a quality proxy server that provides a high level of anonymity and carries a low risk of detection. This helps ensure that you don’t get banned in the middle of your project. Residential proxies use IP addresses that ISPs assign to real devices. These addresses are tied to a physical location, which makes it easier to access geo-blocked websites.

High Speed

Speed is of great importance when scraping the web. Slow connections and pages that take a long time to load waste your time and reduce the workload you can handle in a given period. Datacenter proxies provide the high speed you need. Their IP addresses come from servers in data centers rather than from ISPs, so they are not tied to a home internet connection.

Confidentiality

A private, or dedicated, proxy is a better choice than a shared one for web scraping. With a public or shared proxy, abuse by one user affects everyone using the same proxy. A private proxy is used only by you, which makes it more reliable and typically gives it higher uptime. Make sure the proxy you choose is a dedicated one.

Rotating Proxies

Too many requests from the same IP address will raise suspicion, and with web scraping you have to make numerous requests to get the data you need. Rotating proxies change the IP address with every request or at set intervals, reducing your chances of being detected.
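The idea of switching IP addresses between requests can be sketched on the client side with Python's standard library. This is only an illustration under stated assumptions: the proxy addresses are placeholders from documentation-only IP ranges, and `ProxyRotator` and `fetch` are hypothetical names. A backconnect service typically does the rotation for you behind a single gateway address, in which case you would configure just that one endpoint.

```python
import itertools
import urllib.request

# Placeholder endpoints (assumptions); replace with the ones your provider gives you.
PROXY_POOL = [
    "http://203.0.113.10:8080",
    "http://203.0.113.11:8080",
    "http://203.0.113.12:8080",
]


class ProxyRotator:
    """Hands out proxies in round-robin order, one per request."""

    def __init__(self, proxies):
        if not proxies:
            raise ValueError("proxy pool must not be empty")
        self._cycle = itertools.cycle(proxies)

    def next_proxy(self):
        return next(self._cycle)

    def opener_for_next_request(self):
        """Build a urllib opener that routes traffic through the next proxy."""
        proxy = self.next_proxy()
        handler = urllib.request.ProxyHandler({"http": proxy, "https": proxy})
        return proxy, urllib.request.build_opener(handler)


def fetch(rotator, url, timeout=10):
    """Download one URL through the next proxy in the rotation."""
    proxy, opener = rotator.opener_for_next_request()
    with opener.open(url, timeout=timeout) as response:
        return proxy, response.read()
```

Each call to `fetch(rotator, url)` goes out through a different address until the pool wraps around. Round-robin is the simplest policy; random selection or retiring proxies that start returning errors are common variations.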

Final Thoughts

With web scraping, you can get information that will help you grow your customer base, diversify your product range, set competitive prices, monitor competition, and optimize your website and social media for search engines. By understanding consumer needs and industry trends, you can create products that will penetrate the market. Using a proxy for market research with web scraping provides a competitive advantage for businesses. You can purchase a reliable private or rotating proxy from PrivateProxy.me, which has been providing state-of-the-art proxy server solutions for over 10 years. The company has successfully provided thousands of clients around the world with reliable proxies.

The Challenges and Benefits of Removing Negative Online…

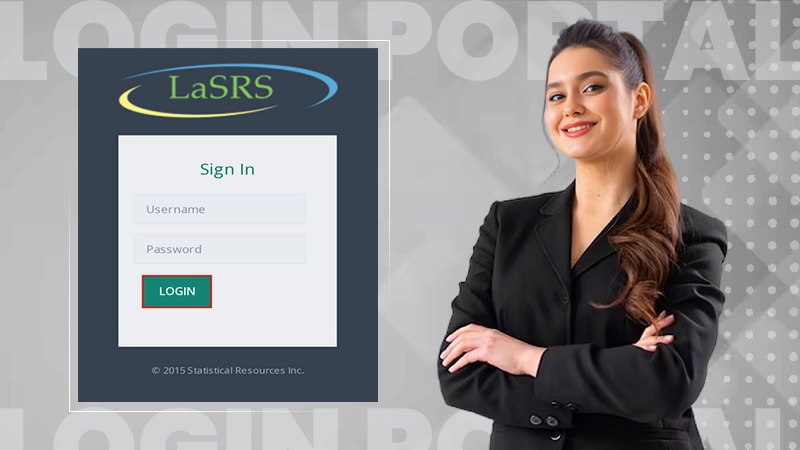

Unlock the Simplest Way to Access LaSRS Login…

Strategic Wins: How SafeOpt Can Boost Your Online…

5 Reasons Why Marketing Matters in Business?

Google Ads: What Are the Basic Checklists to…

The Crucial Role of Press Releases in a…

8 Best Tech Tips to Implement for Better…

Fax Machines in the Digital Age: A Sustainable…

Breaking Barriers: The Power of Business Translation Services

Why Do Businesses Need a Dedicated Mobile App?

The Role of Onboarding in Improving Employee Retention…

3 Major Benefits of Onsite IT Support